Theorem. Let V be a vector space with an inner product < u, v >, and let W be a subspace of V. A vector w0 in W minimizes the distance || v - w || if and only if w0 satisfies the equation,The significance of this theorem is that it provides a way to actually calculate the minimizer. If W has an orthonormal basis E = {u1,..., un}, then we recall that w0 = c1u1 + ... + cnun, where ck = < w0, uk >, k = 1,..., n. By (∗), we have <v - w0, uk > = 0. Thus, <v, uk > = <w0, uk >. From this it follows that ck = <v, uk >, and that the minimizer has the explicit form

(∗) < v - w0, w > = 0,

which holds for all w in W. In addition, w0 is unique.Proof. Let's first show that if w0 in W minimizes || v - w ||, then it satisfies the normal equations. Fix u in W, ||u|| = 1, and let t ∈ R. Define

p(t) := || v - w0 + t u) ||2 = || v - w0 ||2 + 2t < v - w0, u > + t2 || u ||2 = || v - w0 ||2 + 2t < v - w0, u > + t2 = t2 + 2Bt +C. Because w0 minimizes || v - w ||2 over all w in W, the minimum of p(t) is at t = 0. This means that t = 0 is a critical point for p(t), so p'(0) = 0. Calculating p'(0) then gives us 2B = 2< v - w0, u > = 0. Diving by 2 then yields < v - w0, u > = 0. Now, for any w ∈ W, we can let u = w/||w||. Multiplying the last equation by ||w|| then gives us < v - w0, w > = 0 for all w ∈ W.Conversely, if w0 ∈ W satisfies < v - w0, w > = 0, then take w = tu in the polynomial p(t). Doing so gives p(t) = ||v - w0 ||2 +t2, because B = < v - w0, u > = 0. Again, p′(t) = 2t, and so the minimum occurs at t = 0.

To see that the minimizer is unique, suppose that there is a second minimizer, w1 ≠ w0. Both must satisfy the equation (∗), so

< v - w0, w > = 0 and < v - w1, w > = 0. Subtract the two equations to get < w0 - w1, w > = 0, which holds for all w in W. Since both minimizers are in W, which is a subspace, their difference w0 - w1 is also in W. If in the previous equation we take w = w0 - w1, then || w0 - w1 ||2 = 0. It follows that w0 - w1 = 0, and so w1 = w0, which is a contradiction. Thus there is only one minimizer

w0 = ∑k <v, uk > uk. The important feature of this formula is that we can calculate ck = < w0, uk > without knowing what w0 is. In the next sections, we will solve two least squares problems.

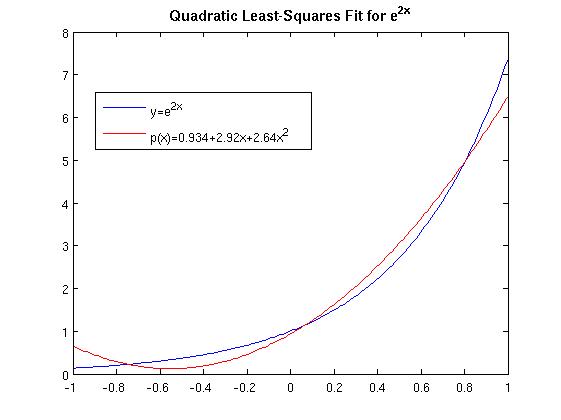

Least squares fitting of a function. We want to find the

quadratic polynomial that gives the best least least squares fit for

the function f(x) = e2x on the interval [-1,1]. In this

case, the inner product and norm are

< f , g > = ∫ −11 f(x)g(x)dx and

||f|| = (∫ −11

f(x)2dx)½.

Since we want to use quadratics, we will take W = P3. The

basis that we will use is E = {p0(x), p1(x),

p3(x)}, where

p0(x) = 2-1/2, p1(x) = (3/2)1/2x, and p2(x)= (5/8)1/2(3x2-1).

These polynomials are called normalized Legendre polynomials and they are orthonormal: <pi, pj> = δij. In this basis, the quadratic polynomial that is the best least squares fit to e2x has the formp(x) = c1p0(x) + c2p(x) + c3p2(x). From our discussion above, we have

c1 = < f, p0> = ∫

−11 e2xp0(x)dx =

8-1/2(e2 - e-2)

c2 = < f, p1> = ∫

−11 e2xp1(x)dx =

(3/32)1/2(e2 + 3e-2)

c3 = < f, p2> = ∫

−11 e2xp2(x)dx =

(5/128)1/2(e2 - 13e-2)

The quadratic polynomial that is the best least squares fit to

e2x is

p(x) = (1/4)(e2 - e-2) + (3/8)(e2 +

3e-2)x + (5/32)(e2 -

13e-2)(3x2-1).

Both the function and quadratic least squares fit are plotted below.

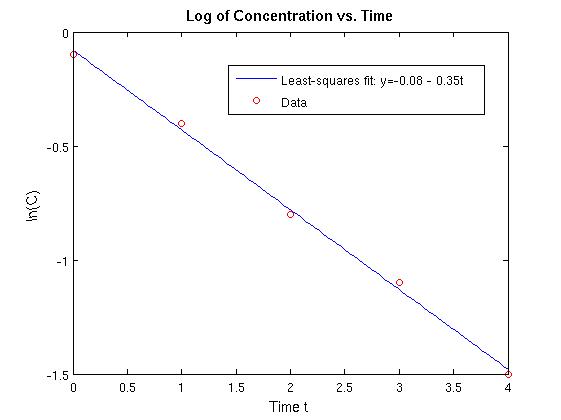

Least-squares data fitting. Problem: The table below contains data obtained by measuring the concentration of a drug in a person's blood. Find and sketch the straight line that best fits the data in the (discrete) least squares sense.

| t | 0 | 1 | 2 | 3 | 4 |

|---|---|---|---|---|---|

| ln(C) | − 0.1 | − 0.4 | − 0.8 | − 1.1 | − 1.5 |

Solution. We want to find coefficients a1 and

a2 such that y = a1 + a2t is the best

least-squares straight-line fit to the data. This means that we choose

the two constants a1 and a2 so that we minimize

the sum S = (y0 − a1 +

a2·0)2 + (y1 −

a1 + a2·1)2 + ... +

(y4 − a1 +

a2·4)2. If we let

v1 = [1 1 1 1 1]T, v2 = [0 1 2 3

4]T, and yd = [-0.1 -0.4 -0.8 -1.1

-1.5]T,

then we can rewrite the sum above in terms of the inner product and

norm for R5:

S = || yd −

c1v1 -

c2v2 ||2

Next, let W = span{v1, v2}. The

minimization problem now can be put in the form discussed earlier:

Find w0 in W such that || yd − w0 || = minw ∈ W || yd − w ||.It is easy to show that if u1 =(5)−½ [1 1 1 1 1]T and u2 = (10)−½;[-2 -1 0 1 2]T, then E = {u1, u2} is an orthonormal basis for W. The idea here is to first find w0 in terms of the E basis, and then change basis to F = {v1, v2}. The reason for doing this is that a1 and a2 are the just coordinates of w0 relative to F. Relative to the E basis, w0 = < yd, u1> u1 + < yd, u2> u2 = −(1.7441 u1 + 1.1068 u2). With a little work, we see that u1 = 5−½v1 and u2 = 10−½(v2 − 2v1). Substituting these in the expression for w0 yields

w0 = −0.0800v1 − 0.35v2. From this, we get c1 = −0.08 and c2 = −0.35. The line we want is y = - 0.08 - 0.35t. The data and the line are plotted below.