Selected Projects

Processing Terrain Point Cloud Data

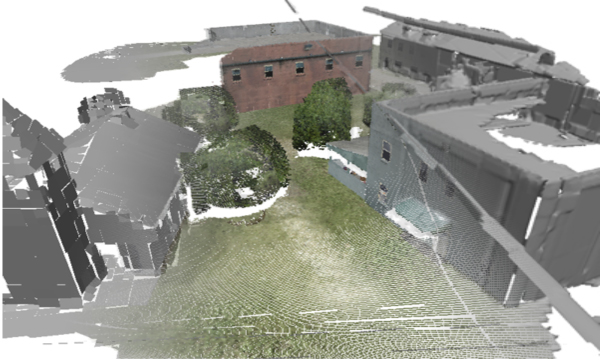

Terrain point cloud data are typically acquired through some form of LiDAR sensing. They form a rich resource that is important in a variety of applications, including navigation, line-of-sight analysis, and terrain visualization. Processing terrain data has not received as much attention as other forms of surface reconstruction or image processing. The goal of terrain data processing is to convert the point cloud into a succinct representation that meets the demands of these applications. This paper presents a platform for terrain processing built on two principles: (i) distortion is measured in the Hausdorff metric, which we argue is a good match for the application demands, and (ii) the data are given a multiscale representation based on tree approximation using local polynomial fitting. The basic elements held in the nodes of the tree can be efficiently encoded, transmitted, visualized, and used in the target applications. Several challenges arise from variable data resolution, missing data, occlusions, and noise; techniques to identify and handle these challenges are developed.
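The Hausdorff metric in (i) measures worst-case deviation. It is the largest distance from any point of one set to the nearest point of the other, taken in both directions. Below is a minimal brute-force sketch. It assumes that both the raw cloud and its approximation are given as lists of (x, y, z) samples. The function names are hypothetical, and a practical implementation would use a spatial index rather than comparing every pair of points.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def directed_hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Largest distance from a point of `a` to its nearest neighbour in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance: worst-case deviation in either direction."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))
```

Consider a flat approximation of three samples where the middle sample is raised by 0.2. The Hausdorff distance between them is 0.2 no matter how many well-fitted samples surround the raised one. A mean-squared error would instead average such an outlier away. This worst-case behaviour is presumably what makes the metric attractive for applications like line of sight.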

Point Cloud Editor

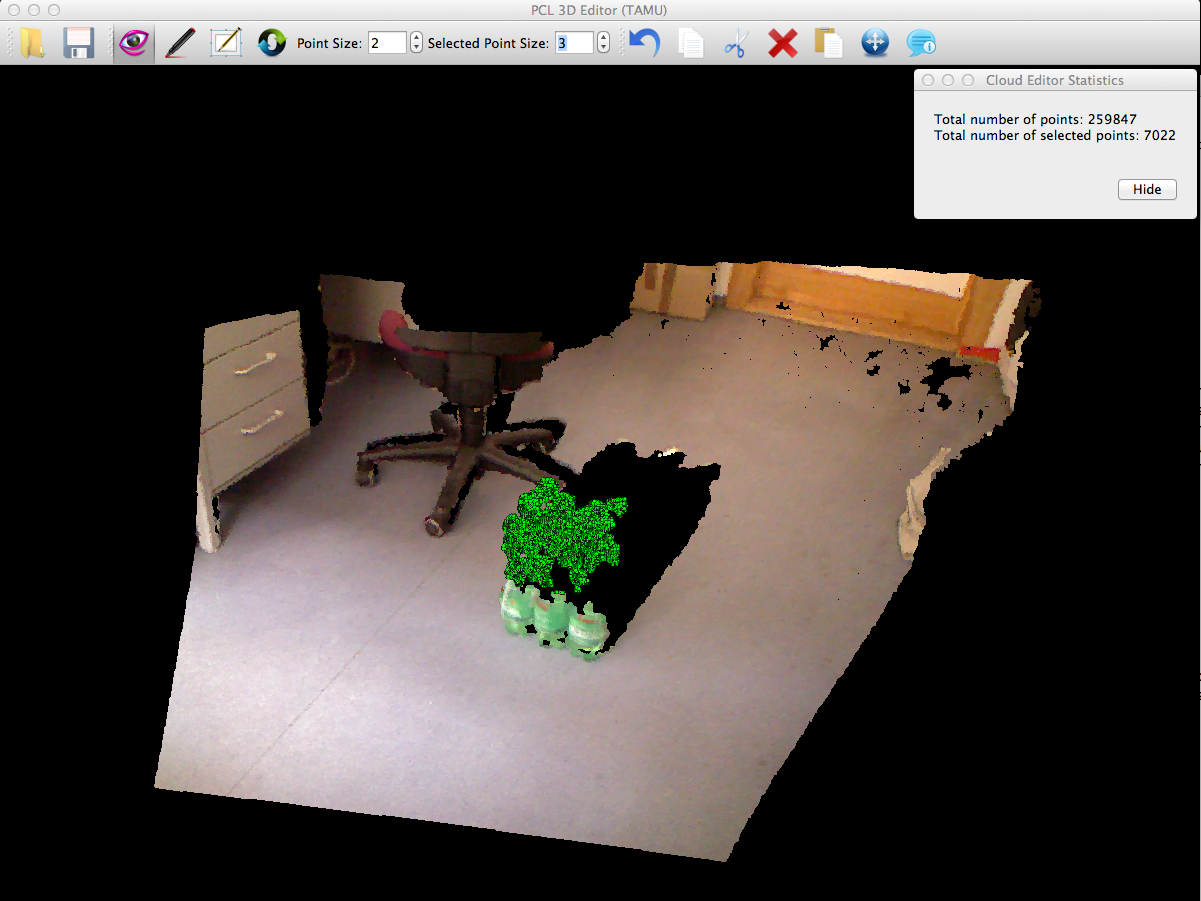

The point cloud editor was written by Yue Li and myself for use in PCL (pointclouds.org). The editor originally supported only point selection by mouse picking and 2D rubber-band selection, along with selection inversion. Selected points could be deleted, cut, copied, and pasted. The basic translation, rotation, and scale operators were also supported for clouds stored in the PCD format. The source for the editor can be found in the apps directory of PCL releases starting with 1.7. The point cloud shown in the image is from the office subdirectory of the PCL data SVN repository. Check out PointCloudLibrary on GitHub for the latest version and feature list.
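Once every point has been projected to screen coordinates, the selection operations described above reduce to simple set manipulations. The sketch below is hypothetical and is not the PCL editor's code or API. It assumes the projection to pixel coordinates has already been done. It shows rubber-band selection, selection inversion, and a translation applied only to the selected points.

```python
from typing import List, Set, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]


def rubber_band_select(screen: List[Point2], corner_a: Point2, corner_b: Point2) -> Set[int]:
    """Indices of points whose projected position lies inside the dragged rectangle."""
    xmin, xmax = sorted((corner_a[0], corner_b[0]))
    ymin, ymax = sorted((corner_a[1], corner_b[1]))
    return {i for i, (x, y) in enumerate(screen)
            if xmin <= x <= xmax and ymin <= y <= ymax}


def invert_selection(n_points: int, selected: Set[int]) -> Set[int]:
    """Select every point that is currently unselected, and vice versa."""
    return set(range(n_points)) - selected


def translate_selected(cloud: List[Point3], selected: Set[int], offset: Point3) -> List[Point3]:
    """Return a new cloud in which only the selected points are moved by `offset`."""
    dx, dy, dz = offset
    return [(x + dx, y + dy, z + dz) if i in selected else (x, y, z)
            for i, (x, y, z) in enumerate(cloud)]
```

The rectangle corners are sorted first, so the selection works whichever direction the mouse is dragged.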

Hyperspectral LiDAR Processing

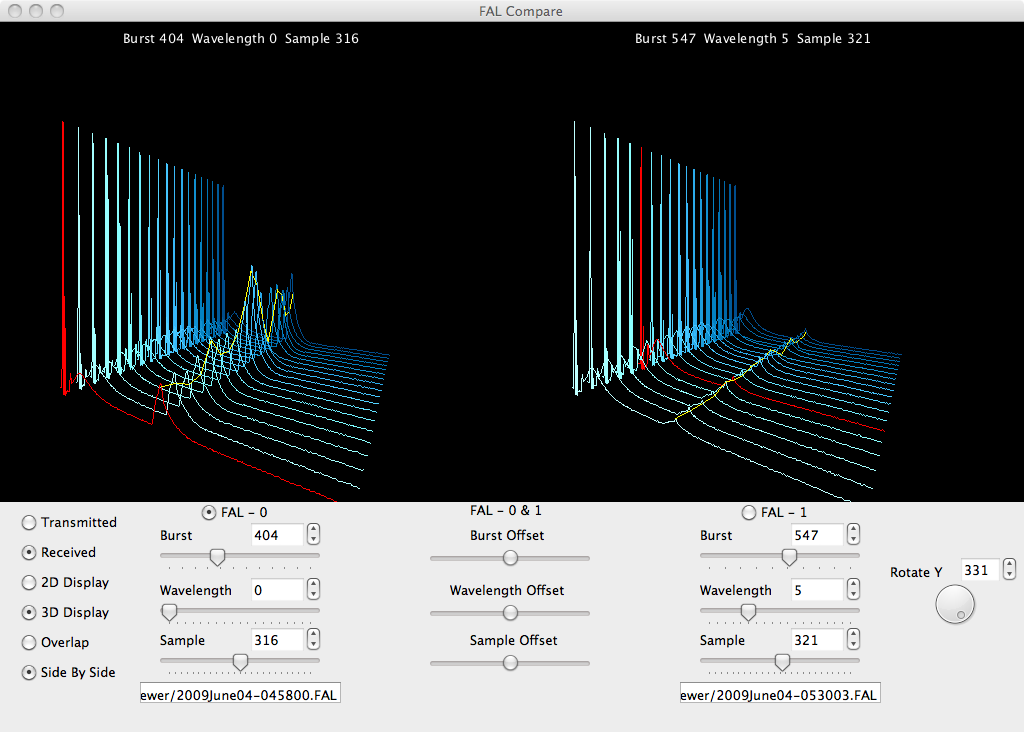

As part of an NSF/DTRA project, I developed several simple tools for processing hyperspectral LiDAR data in order to classify optically thin aerosols. The image on the left shows a screenshot of one of the viewers I developed for comparing the raw data and performing basic analysis on it. Together with students Billy Clack, Aditay Kumar, Abhilasha Seth, and Alok Sood, I also developed several additional applications to further process and classify this data.

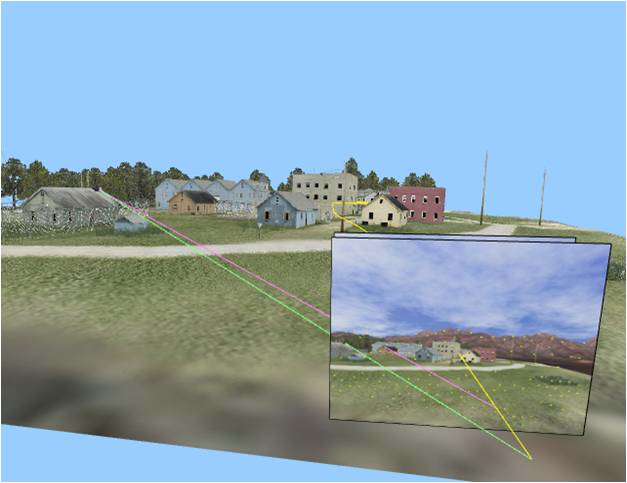

LiDAR Point Cloud Assimilation: Hybrid Surface Approximation

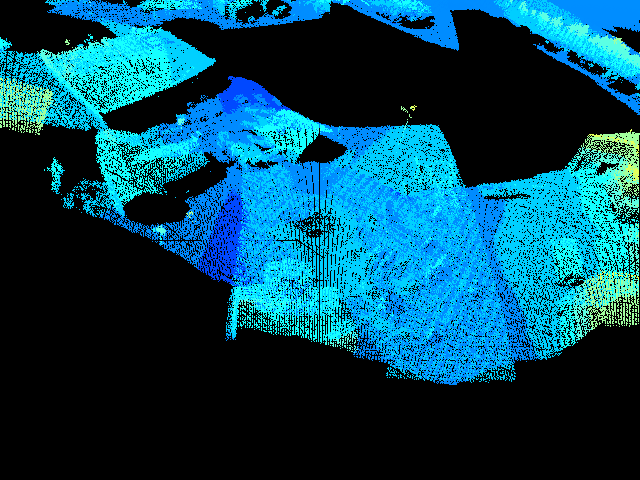

This project was part of a MURI that I contributed to while working at USC. My contributions included generating simulation data and environments, as well as developing software for sparse occupancy grids, adaptive tetrahedral partitions, and line-of-sight computations. The data shown in the image are from AFRL/MNG VEAA Data Set #1.
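Line-of-sight queries are a natural fit for a sparse occupancy grid. The sketch below is a hedged illustration, not the MURI software. It stores occupied voxels in a hash set and walks a sight line through the grid using the voxel-traversal algorithm of Amanatides and Woo. Three things are assumptions: the class name, the fixed voxel size, and the rule that the eye and target voxels never block the view.

```python
import math
from typing import Iterator, Set, Tuple

Voxel = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]


class SparseOccupancyGrid:
    """Stores only occupied voxels, keyed by integer cell index (a hash set)."""

    def __init__(self, voxel_size: float) -> None:
        self.voxel_size = voxel_size
        self.occupied: Set[Voxel] = set()

    def key(self, p: Vec3) -> Voxel:
        s = self.voxel_size
        return (math.floor(p[0] / s), math.floor(p[1] / s), math.floor(p[2] / s))

    def insert(self, p: Vec3) -> None:
        self.occupied.add(self.key(p))

    def traverse(self, start: Vec3, end: Vec3) -> Iterator[Voxel]:
        """Yield every voxel the segment start->end passes through (3D DDA)."""
        s = self.voxel_size
        cur = list(self.key(start))
        last = self.key(end)
        step = [0, 0, 0]
        t_max = [math.inf] * 3    # segment parameter t at the next boundary crossing
        t_delta = [math.inf] * 3  # increase in t needed to cross one whole voxel
        for i in range(3):
            d = end[i] - start[i]
            if d > 0:
                step[i], t_max[i], t_delta[i] = 1, ((cur[i] + 1) * s - start[i]) / d, s / d
            elif d < 0:
                step[i], t_max[i], t_delta[i] = -1, (cur[i] * s - start[i]) / d, -s / d
        yield (cur[0], cur[1], cur[2])
        while (cur[0], cur[1], cur[2]) != last:
            axis = min(range(3), key=lambda i: t_max[i])
            if t_max[axis] > 1.0:
                break  # guards against floating-point overshoot past `end`
            cur[axis] += step[axis]
            t_max[axis] += t_delta[axis]
            yield (cur[0], cur[1], cur[2])

    def line_of_sight(self, eye: Vec3, target: Vec3) -> bool:
        """True if no occupied voxel lies strictly between the eye and target voxels."""
        endpoints = {self.key(eye), self.key(target)}
        return not any(v in self.occupied and v not in endpoints
                       for v in self.traverse(eye, target))
```

Storing only occupied cells keeps memory proportional to the number of surface voxels rather than to the volume of the bounding box.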

LiDAR Sensor Add-on for Simulator

Developed a simple library that allows LiDAR-like sensors to be configured and placed in the simulator. The package performs a simple range computation for each ray against the loaded models, with additional options for multi-sampling and Gaussian noise. The demo video shows a simple line scanner collocated with a camera. Each 'ray' from the LiDAR is projected through the center of one pixel in the middle row of each camera frame. The resulting point cloud is drawn as it is scanned and is colored by height.
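The collocation described above amounts to generating one ray per middle-row pixel of a pinhole camera. Here is a minimal sketch. Several of its assumptions do not come from the original library:

- the camera intrinsics: focal length in pixels, with the principal point at the image centre;
- a single planar wall standing in for the loaded models;
- noise added to the range along each ray.

Multi-sampling is omitted, and all names are hypothetical.

```python
import math
import random
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def middle_row_rays(width: int, height: int, focal_px: float) -> List[Vec3]:
    """Unit ray directions (camera frame, +z forward) through the centre of
    each pixel in the middle image row of a pinhole camera."""
    cx, cy = width / 2.0, height / 2.0  # assumed principal point: image centre
    v = height // 2  # middle row; for an even height, the second of the two central rows
    rays = []
    for u in range(width):
        x = (u + 0.5 - cx) / focal_px
        y = (v + 0.5 - cy) / focal_px
        n = math.sqrt(x * x + y * y + 1.0)
        rays.append((x / n, y / n, 1.0 / n))
    return rays


def range_to_wall(direction: Vec3, wall_z: float) -> Optional[float]:
    """Range along a unit ray from the sensor origin to the plane z = wall_z
    (a stand-in for the range computation against loaded models)."""
    if direction[2] <= 0.0 or wall_z <= 0.0:
        return None  # no hit in front of the sensor
    return wall_z / direction[2]


def line_scan(width: int, height: int, focal_px: float, wall_z: float,
              noise_sigma: float = 0.0,
              rng: Optional[random.Random] = None) -> List[Optional[Vec3]]:
    """One scan: a 3D point per middle-row pixel, with optional Gaussian range noise."""
    if rng is None:
        rng = random.Random(0)
    points: List[Optional[Vec3]] = []
    for d in middle_row_rays(width, height, focal_px):
        r = range_to_wall(d, wall_z)
        if r is None:
            points.append(None)
            continue
        if noise_sigma > 0.0:
            r += rng.gauss(0.0, noise_sigma)  # noise perturbs the range along the ray
        points.append((r * d[0], r * d[1], r * d[2]))
    return points
```

Each point is the range times the unit ray direction. Colouring by height would then use the points' vertical coordinate.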

Active Vision Control of Agile Autonomous Flight (AVCAAF)

Combined Mathematical Learning, Receding Horizon Control, and Structure from Motion to enable vision-based autonomous flight of a Micro Aerial Vehicle. The implementation was put together as a proof of concept and did not run in real time. This work was done as part of a MURI at USC. The demo video comes from the implementation put together by Dr. Richard Prazenica and myself.

Feature Detection, Tracking and Structure From Motion

Implemented multi-resolution Harris feature-point detection and tracking. Also implemented several structure-from-motion (SFM) methods, drawing mostly on the work of Dr. David Nister.
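Harris detection scores each pixel by the structure tensor of its local image gradients. Below is a minimal pure-Python sketch of the multi-resolution detection step. It assumes central-difference gradients, a 3x3 box window, k = 0.04, pyramid levels built by 2x2 averaging, and 3x3 non-maximum suppression. These choices and all names are illustrative. Tracking and SFM are not shown.

```python
from typing import List, Tuple

Image = List[List[float]]


def harris_response(img: Image, k: float = 0.04) -> Image:
    """R = det(M) - k * trace(M)^2, where M sums gradient products over a 3x3 window."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):  # central-difference gradients
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    r = [[0.0] * w for _ in range(h)]
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            sxx = syy = sxy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            r[y][x] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return r


def downsample(img: Image) -> Image:
    """One pyramid level: halve the resolution by averaging 2x2 blocks."""
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, len(img[0]) - 1, 2)]
            for y in range(0, len(img) - 1, 2)]


def multiscale_corners(img: Image, levels: int = 3,
                       threshold: float = 1e-3) -> List[Tuple[int, int, int]]:
    """(level, row, col) of 3x3 local maxima of R above `threshold` at each level."""
    found: List[Tuple[int, int, int]] = []
    for level in range(levels):
        r = harris_response(img)
        for y in range(1, len(r) - 1):
            for x in range(1, len(r[0]) - 1):
                v = r[y][x]
                if v > threshold and all(v >= r[y + dy][x + dx]
                                         for dy in (-1, 0, 1) for dx in (-1, 0, 1)):
                    found.append((level, y, x))
        if min(len(img), len(img[0])) < 10:
            break  # too small to build another useful level
        img = downsample(img)
    return found
```

Coordinates are reported in each level's own resolution; multiplying by 2**level maps them back to the full image. Along a straight edge the response is negative, and in flat regions it is zero. Only corners produce a large positive R.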

USC Simulator

Developed a virtual environment for creating data sets for vision processing. The package allows 3D models to be loaded and multiple cameras to be placed in the scene. The cameras and models can all be loaded and manipulated from MATLAB, while all camera rendering takes place in an OpenGL module.

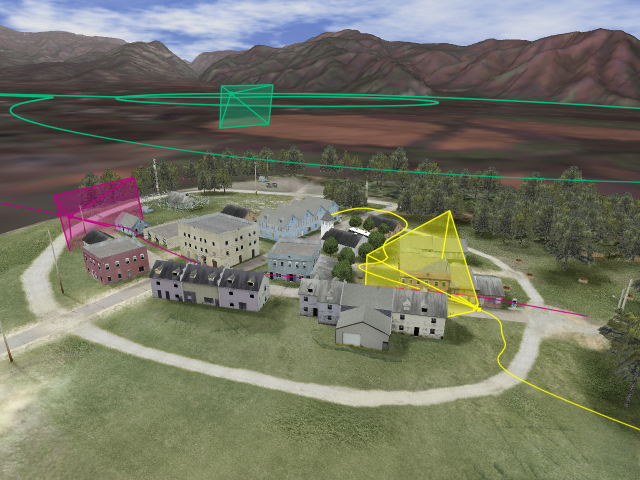

2.5D Mathematical Learning

Used mathematical learning and ROAM (Real-time Optimally Adapting Meshes) to create adaptive piecewise-constant triangular meshes from point clouds. The piecewise-constant triangle values were averaged at the vertices to convert the mesh into a continuous piecewise-linear mesh. This method allowed real-time integration of millions of streaming 3D points within a fixed memory budget. A ROAM-style triangulation was incorporated so that the level of detail could be adjusted automatically according to the viewer's location and view. The data used in the video to the left are a portion of AFRL/MNG VEAA Data Set #1. (poster)
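The two-stage conversion described above can be illustrated on a fixed triangulation. This is a minimal sketch with the following assumptions:

- triangles are given as vertex-index triples over xy vertices;
- each triangle's constant is the mean height of the points that fall inside it, which is the least-squares constant.

ROAM refinement, streaming, and the fixed memory budget are not shown, and all names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

XY = Tuple[float, float]
Tri = Tuple[int, int, int]


def _cross(o: XY, a: XY, b: XY) -> float:
    """z-component of (a - o) x (b - o); its sign says which side of o->a the point b is on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def contains(a: XY, b: XY, c: XY, p: XY) -> bool:
    """Point-in-triangle test in the xy plane (points on the boundary count as inside)."""
    d1, d2, d3 = _cross(a, b, p), _cross(b, c, p), _cross(c, a, p)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)


def piecewise_constant(verts: Sequence[XY], tris: Sequence[Tri],
                       points: Sequence[Tuple[float, float, float]]) -> List[Optional[float]]:
    """Least-squares constant per triangle: the mean height of the points it contains."""
    sums = [0.0] * len(tris)
    counts = [0] * len(tris)
    for x, y, z in points:
        for t, (i, j, k) in enumerate(tris):
            if contains(verts[i], verts[j], verts[k], (x, y)):
                sums[t] += z
                counts[t] += 1
                break  # a point on a shared edge goes to the first triangle found
    return [s / n if n else None for s, n in zip(sums, counts)]


def vertex_average(n_verts: int, tris: Sequence[Tri],
                   values: Sequence[Optional[float]]) -> List[Optional[float]]:
    """Average each vertex over its incident triangles, giving a continuous
    piecewise-linear surface; triangles without data are skipped."""
    acc = [0.0] * n_verts
    cnt = [0] * n_verts
    for tri, v in zip(tris, values):
        if v is None:
            continue
        for idx in tri:
            acc[idx] += v
            cnt[idx] += 1
    return [a / c if c else None for a, c in zip(acc, cnt)]
```

The unweighted average follows the description in the text. Weighting by triangle area or by point count would be a natural variation.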

VMD Molecule Editor

VMD is a molecular visualization package that allows researchers to display, animate, and analyze molecular structures. The purpose of this project was to add to VMD a simple editor for manipulating molecular geometries in a CAVE environment. The tools created in this project allowed atom, bond, and angle information to be easily read and modified through a 6D mouse interface. Head-tracking and stereo rendering were also used to provide a more natural editing environment. I worked on this project as an undergraduate under the direction of Dr. Robert Sharpley and Dr. Daniel Dix. For more details, see "Biomolecular Modeling" by Dr. Daniel Dix.